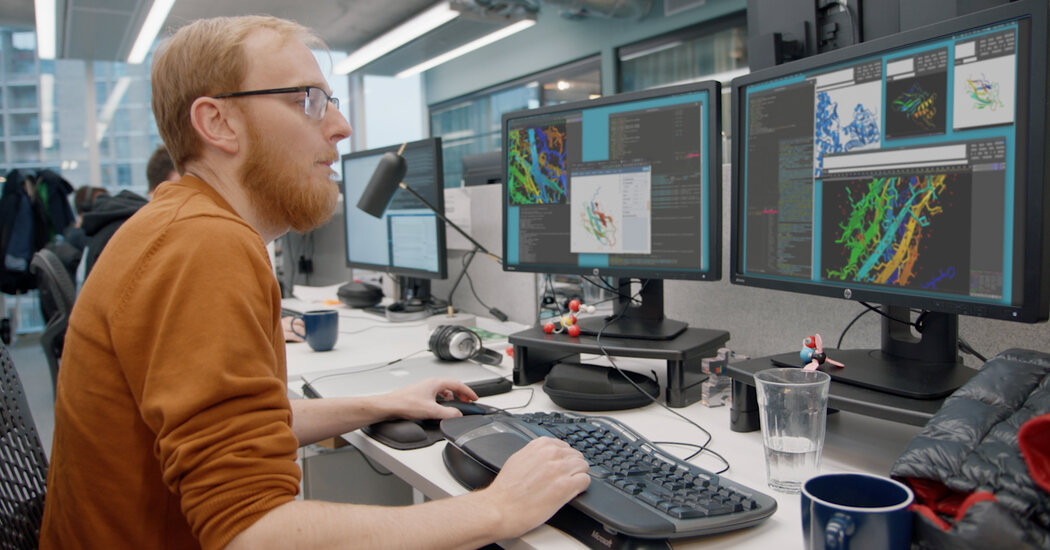

Real scalpels, artificial intelligence: what could go wrong?

Sitting on a stool several feet from a long-armed robot, Dr. Danyal Fer wrapped his fingers around two metal handles near his chest.

As he moved the handles (up and down, left and right), the robot mimicked each small motion with its own two arms. Then, when he pinched his thumb and forefinger together, one of the robot’s tiny claws did much the same. This is how surgeons like Dr. Fer have long used robots when operating on patients. They can remove a prostate from a patient while sitting at a computer console across the room.

But after this brief demonstration, Dr. Fer and his fellow researchers at the University of California, Berkeley, showed how they hope to advance the state of the art. Dr. Fer let go of the handles, and a new kind of computer software took over. As he and the other researchers looked on, the robot started to move entirely on its own.

With one claw, the machine lifted a tiny plastic ring from an equally tiny peg on the table, passed the ring from one claw to the other, moved it across the table and gingerly hooked it onto a new peg. Then the robot did the same with several more rings, completing the task as quickly as it had when guided by Dr. Fer.

The training exercise was originally designed for humans; moving the rings from peg to peg is how surgeons learn to operate robots like the one in Berkeley. Now, an automated robot performing the test can match or even exceed a human in dexterity, precision and speed, according to a new research paper from the Berkeley team.

The project is a part of a much wider effort to bring artificial intelligence into the operating room. Using many of the same technologies that underpin self-driving cars, autonomous drones and warehouse robots, researchers are working to automate surgical robots too. These methods are still a long way from everyday use, but progress is accelerating.

Dr.
Danyal Fer, a surgeon and researcher, has long used robots while operating on patients. (Sarahbeth Maney for The New York Times)

“It is an exciting time,” said Russell Taylor, a professor at Johns Hopkins University and former IBM researcher known in the academic world as the father of robotic surgery. “It is where I hoped we would be 20 years ago.”

The aim is not to remove surgeons from the operating room but to ease their load and perhaps even raise success rates, where there is room for improvement, by automating particular phases of surgery.

Robots can already exceed human accuracy on some surgical tasks, like placing a pin into a bone (a particularly risky task during knee and hip replacements). The hope is that automated robots can bring greater accuracy to other tasks, like incisions or suturing, and reduce the risks that come with overworked surgeons.

During a recent phone call, Greg Hager, a computer scientist at Johns Hopkins, said that surgical automation would progress much like the Autopilot software that was guiding his Tesla down the New Jersey Turnpike as he spoke. The car was driving on its own, he said, but his wife still had her hands on the wheel, should anything go wrong. And she would take over when it was time to exit the highway.

“We can’t automate the whole process, at least not without human oversight,” he said. “But we can start to build automation tools that make the life of a surgeon a little bit easier.”

Five years ago, researchers with the Children’s National Health System in Washington, D.C., designed a robot that could automatically suture the intestines of a pig during surgery. It was a notable step toward the kind of future envisioned by Dr. Hager.
But it came with an asterisk: The researchers had implanted tiny markers in the pig’s intestines that emitted a near-infrared light and helped guide the robot’s movements.

Scientists believe neural networks will eventually help surgical robots perform operations on their own. (Sarahbeth Maney for The New York Times)

The method is far from practical, as the markers are not easily implanted or removed. But in recent years, artificial intelligence researchers have significantly improved the power of computer vision, which could allow robots to perform surgical tasks on their own, without such markers.

The change is driven by what are called neural networks, mathematical systems that can learn skills by analyzing vast amounts of data. By analyzing thousands of cat photos, for instance, a neural network can learn to recognize a cat. In much the same way, a neural network can learn from images captured by surgical robots.

Surgical robots are equipped with cameras that record three-dimensional video of each operation. The video streams into a viewfinder that surgeons peer into while guiding the operation, watching from the robot’s point of view.

But afterward, these images also provide a detailed road map showing how surgeries are performed. They can help new surgeons understand how to use these robots, and they can help train robots to handle tasks on their own. By analyzing images that show how a surgeon guides the robot, a neural network can learn the same skills.

This is how the Berkeley researchers have been working to automate their robot, which is based on the da Vinci Surgical System, a two-armed machine that helps surgeons perform more than a million procedures a year. Dr. Fer and his colleagues collect images of the robot moving the plastic rings while under human control. Then their system learns from these images, pinpointing the best ways of grabbing the rings, passing them between claws and moving them to new pegs.

But this process came with its own asterisk.
When the system told the robot where to move, the robot often missed the spot by millimeters. Over months and years of use, the many metal cables inside the robot’s twin arms have stretched and bent in small ways, so its movements were not as precise as they needed to be.

Human operators could compensate for this shift, unconsciously. But the automated system could not. This is often the problem with automated technology: It struggles to deal with change and uncertainty. Autonomous vehicles are still far from widespread use because they aren’t yet nimble enough to handle all the chaos of the everyday world.

From left: At the University of California, Berkeley, Ken Goldberg, an engineering professor; Samuel Paradis, a master’s student; Brijen Thananjeyan, a doctoral candidate; and Dr. Minho Hwang watched as the da Vinci Research Kit conducted the peg transfer. (Sarahbeth Maney for The New York Times)

The Berkeley team decided to build a new neural network that analyzed the robot’s mistakes and learned how much precision it was losing with each passing day. “It learns how the robot’s joints evolve over time,” said Brijen Thananjeyan, a doctoral student on the team. Once the automated system could account for this change, the robot could grab and move the plastic rings, matching the performance of human operators.

Other labs are trying different approaches. Axel Krieger, a Johns Hopkins researcher who was part of the pig-suturing project in 2016, is working to automate a new kind of robotic arm, one that has fewer moving parts and behaves more consistently than the kind of robot used by the Berkeley team.
Researchers at the Worcester Polytechnic Institute are developing ways for machines to carefully guide surgeons’ hands as they perform particular tasks, like inserting a needle for a cancer biopsy or burning into the brain to remove a tumor.

“It is like a car where the lane-following is autonomous but you still control the gas and the brake,” said Greg Fischer, one of the Worcester researchers.

Many obstacles lie ahead, scientists note. Moving plastic pegs is one thing; cutting, moving and suturing flesh is another. “What happens when the camera angle changes?” said Ann Majewicz Fey, an associate professor at the University of Texas, Austin. “What happens when smoke gets in the way?”

For the foreseeable future, automation will be something that works alongside surgeons rather than replaces them. But even that could have profound effects, Dr. Fer said. For instance, doctors could perform surgery across distances far greater than the width of the operating room, from miles or more away, perhaps, helping wounded soldiers on distant battlefields.

The signal lag is too great to make that possible currently. But if a robot could handle at least some of the tasks on its own, long-distance surgery could become viable, Dr. Fer said: “You could send a high-level plan and then the robot could carry it out.”

The same technology would be essential to remote surgery across even longer distances. “When we start operating on people on the moon,” he said, “surgeons will need entirely new tools.”
