Covid Test Misinformation Spikes Along With Spread of Omicron

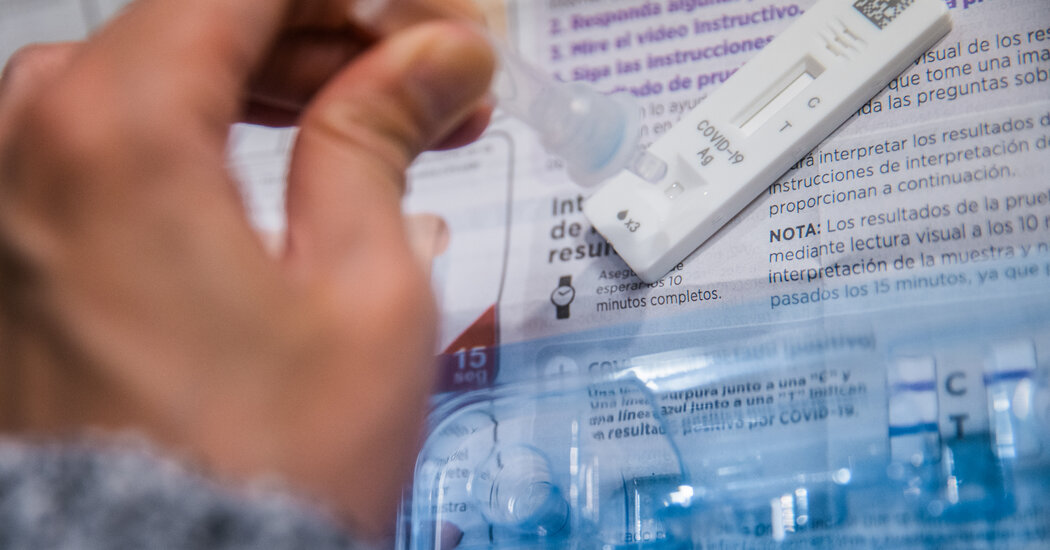

The added demand for testing and the higher prevalence of breakthrough cases have created an “opportune moment” to exploit.

On Dec. 29, The Gateway Pundit, a far-right website that often spreads conspiracy theories, published an article falsely implying that the Centers for Disease Control and Prevention had withdrawn authorization of all P.C.R. tests for detecting Covid-19. The article collected 22,000 likes, comments and shares on Facebook and Twitter.

On TikTok and Instagram, videos of at-home Covid-19 tests displaying positive results after being soaked in drinking water and juice have gone viral in recent weeks, and were used to push the false narrative that coronavirus rapid tests don’t work. Some household liquids can make a test show a positive result, health experts say, but the tests remain accurate when used as directed. One TikTok video showing a home test that came out positive after being placed under running water was shared at least 140,000 times.

And on YouTube, a video titled “Rapid antigen tests debunked” was posted on Jan. 1 by the Canadian far-right website Rebel News. It generated over 40,000 views, and its comments section was a hotbed of misinformation. “The straight up purpose of this test is to keep the case #’s as high as possible to maintain fear & incentive for more restrictions,” said one comment with more than 200 likes. “And of course Profit.”

Misinformation about Covid-19 tests has spiked across social media in recent weeks, researchers say, as coronavirus cases have surged again worldwide because of the highly infectious Omicron variant.

The burst of misinformation threatens to further stymie public efforts to keep the health crisis under control. Previous spikes in pandemic-related falsehoods focused on the vaccines, masks and the severity of the virus.
The falsehoods help undermine best practices for controlling the spread of the coronavirus, health experts say, noting that misinformation remains a key factor in vaccine hesitancy.

The categories include falsehoods that P.C.R. tests don’t work; that the counts for flu and Covid-19 cases have been combined; that P.C.R. tests are vaccines in disguise; and that at-home rapid tests have a predetermined result or are unreliable because different liquids can turn them positive.

These themes jumped into the thousands of mentions in the last three months of 2021, compared with just a few dozen in the same time period in 2020, according to Zignal Labs, which tracks mentions on social media, on cable television and in print and online outlets.

The added demand for testing due to Omicron and the higher prevalence of breakthrough cases has given purveyors of misinformation an “opportune moment” to exploit, said Kolina Koltai, a researcher at the University of Washington who studies online conspiracy theories. The false narratives “support the whole idea of not trusting the infection numbers or trusting the death count,” she said.

The Gateway Pundit did not respond to a request for comment. TikTok pointed to its policies that prohibit misinformation that could cause harm to people’s physical health. YouTube said it was reviewing the videos shared by The New York Times in line with its Covid-19 misinformation policies on testing and diagnostics. Twitter said that it had applied a warning to The Gateway Pundit’s article in December for violating its coronavirus misinformation policy and that tweets containing false information about widely accepted testing methods would also violate its policy.
But the company said it does not take action on personal anecdotes.

Facebook said it had worked with its fact-checking partners to label many of the posts with warnings that directed people toward fact checks of the false claims, and reduced their prominence on its users’ feeds.

“The challenges of the pandemic are constantly changing, and we’re consistently monitoring for emerging false claims on our platforms,” Aaron Simpson, a Facebook spokesman, said in an email.

No medical test is perfect, and legitimate questions about the accuracy of Covid-19 tests have abounded throughout the pandemic. There has always been a risk of a false positive or a false negative result. The Food and Drug Administration says there is a potential for antigen tests to return false positive results when users do not follow the instructions. Those tests are generally accurate when used correctly but in some cases can appear to show a positive result when exposed to other liquids, said Dr. Glenn Patriquin, who published a study about false positives in antigen tests using various liquids in a publication of the American Society for Microbiology.

“Using a fluid with a different chemical makeup than what was designed means that result lines might appear unpredictably,” said Dr. Patriquin, an assistant professor of pathology at Dalhousie University in Nova Scotia.

Complicating matters, there have been some defective products. Last year, the Australian company Ellume recalled about two million of the at-home testing products that it had shipped to the United States.

But when used correctly, coronavirus tests are considered reliable at detecting people carrying high levels of the virus. Experts say our evolving knowledge of tests should be a distinct issue from lies about testing that have spread widely on social media, though it does make debunking those lies more challenging.

“Science is inherently uncertain and changes, which makes tackling misinformation exceedingly difficult,” Ms. Koltai said.
